This edition of the Parallel Haskell Digest kicks off with some warm feelings from BioHaskeller Ketil Malde

Blessing of the day goes to the authors of threadscope. In fact, let me look it up: Donnie Jones, Simon Marlow, and Satnam Singh. I had an old STM experiment lying around, but couldn't quite make it go faster than the single threaded version.

Today I installed threadscope, and a glance at the output sufficed to identify the problem, and now at least it's faster. Great tool! (But you all knew that, of course)

Thanks indeed to the original authors of ThreadScope! Ketil made good use of the original ThreadScope. Since the original release, the folks at Well-Typed have taken over development of ThreadScope (Andres, Duncan, and Mikolaj) and are working to extend it so that it becomes even easier to squash those pesky performance bugs. These extensions, much like this digest, are made possible by the Parallel GHC Project. We hope you'll love the results.

News

Data Parallelism in Haskell slides and video: Manuel Chakravarty came all the way from Sydney (that's 931 km!) to tell the Brisbane FP Group about work going on at UNSW on data parallel programming in Haskell. The talk covers a lot of ground. It's an hour and a half long and

[It] motivates the use of functional programming for parallel, and in particular, data parallel programming and explains the difference between regular and irregular (or nested) data parallelism. It also discusses the Repa library (regular data parallelism for multicore CPUs), the embedded language Accelerate (regular data parallelism for GPUs), and Data Parallel Haskell (nested data parallelism for multicore CPUs).Both video and slides are available, so check it out!

Word of the Month

The word of the month is dataflow as in dataflow parallelism. (And if

you've just seen Manuel's talk, not to be confused with data

parallelism). With dataflow, we will be wrapping up our now four-part

series on parallel programming in Haskell. In recent words of the month,

we've been looking at various approaches for parallelising your way to

faster code. We started with the parallel arrays library Repa, then

moved on to the Haskell par and pseq primitives, and built from

there to get Strategies. Now we'll be looking at monad-par, a library

for dataflow parallelism.

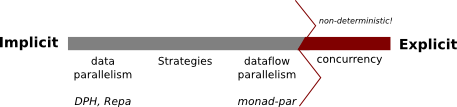

First, the bigger picture: why do we have another way of doing parallel programming in Haskell when we already have parallel arrays and Strategies? Part of the answer is parallelism is still a research topic, with new ideas coming out from time to time and some old ideas slowly withering away. For another part of the answer, it may help to think in terms of a trade-off between implicitness and performance, in other words, between Easy and Fast.

Not all problems are subject to the trade-off in the same way, and it's not always clear to what extent they are. As a rough sketch, problems that require applying the same operation on a large number of simple object can get fast performance from a parallel arrays library. This is a form of data parallelism. If the problem does not lend itself to data parallelism, the next port of call is likely Strategies. If Strategies are not adequate for the desired speedups, the problem may require an even more explicit approach.

Until recently, this meant “rolling your own” parallelism by using Concurrent Haskell: forking off threads, assigning tasks to them, and communicating finished work between them. Using concurrency for DIY parallelism is risky. It means venturing into IO, giving up determinism, exposing ourselves to all manner of side-effects, not to mention subtle concurrency bugs like deadlock. As Haskellers, we should demand better: safer, more predictable and easier to reason about. We want to have explicit fine-grained control without all the nasty side-effects. And now we can have it!

The latest addition to the parallel Haskell quiver is the monad-par library. Programming in monad-par looks a lot like programming in Concurrent Haskell:

data Par a instance Monad Par runPar :: Par a -> a fork :: Par () -> Par () data IVar a new :: Par (IVar a) put :: NFData a => IVar a -> a -> Par () get :: IVar a -> Par a

Instead of using forkIO, we use fork to create threads (not sparks!)

and instead of using MVar's to communicate, we use IVar's. MVar and

IVar behave in somewhat similar ways, in that any attempt to read from

an IVar will wait until it has been filled. But this is also where

differences emerge. Unlike their concurrent counterparts IVar may only

be written to once; subsequent writes result in an error. This aligns

with our desire for determinism. We never have to worry about the IVar

changing values over time. Moreover, the Par monad does not allow for

any IO (feature! not a bug) and computations in the Par

monad can always be extracted with a simple runPar.

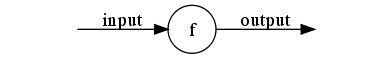

But this doesn't really tell us all that much about how to use this library. For now we come back to our word dataflow. The dataflow model treats program as a little black box, an input goes in, an output comes out, and what happens in between is immaterial:

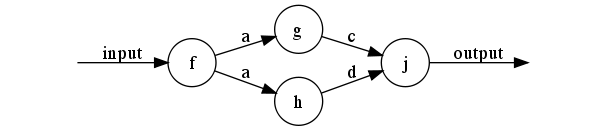

The program is represented as a directed graph (a dataflow network),

with each node representing an individual operation (black boxes in

their own right), and connections between the nodes expressing

dependencies. In the graph above, g and h depend on the output of

f (the same output - there is only one) and in turn, i depends on

the output of both g and h.

Dataflow networks are handy thinking tools because one of the things

that makes it hard to get fast parallel code is the inherent

sequentially that dependencies introduce. A dataflow network nicely

captures which parts of the program are parallel (g and h are

independent of each other) and which parts are sequential (g depends

on f). This is a sort of parallelism topline; we can't go any faster

than what this network allows, but at the very least we should take the

shape of the network into account so we don't make matters worse and

find ourselves waiting a lot more on dependencies than is strictly

necessary.

Using the Par monad essentially consists in translating dataflow

networks into code.

Suppose we have functions

f :: In -> A g :: A -> B h :: A -> C j :: B -> C -> Out

For the graph above, we might say:

network :: IVar In -> Par Out

network inp = do

[vf,vg,vh] <- sequence [new,new,new]

fork $ do x <- get inp

put vf (f x)

fork $ do x <- get vf

put vg (g x)

fork $ do x <- get vf

put vh (h x)

x <- get vg

y <- get vh

return (j x y)

It's useful to note that here dataflow graphs need not be static; they may be dynamically generated to fit the shape of the input. To see this and also a more realistic taste of monad-par, check out Simon Marlow's Parallel and Concurrent Programming Haskell tutorial. It shows how a parallel type inferencer might be implemented, its dataflow graph arising from the set of bindings it receives as input.

Finally what about Strategies? How do we choose between the two? Both

are IO-free and deterministic, both require some but not a whole lot of

comfort with monads. Par may be easier to use correctly than

Strategies because it is strict and forces you to explicitly delineate

the dependencies in parallel computations. This also makes it more

verbose on the other hand. Aside from being more verbose, Par can be a

little bit “messier” than Strategies because you don't get the same

separation between algorithm and parallelism. And since we're ultimately

concerned with performance, it's worth knowing that the Par monad

currently involves more overhead than the Eval monad from Strategies.

The latter may be more appropriate at finer granularities.

Perhaps one way to go about this is to start off with Strategies as they

have been around longer and may require less invasive modifications to

your code. But if you find Strategies to be difficult, or you just can't

get the performance you want, don't reach for those forkIO's yet. Give

Par a try.

For more about monad-par, do visit Simon's tutorial and the API on hackage. To dig deeper, you may also find it instructive to read A Monad of Deterministic Parallelism (Marlow et al) presented in this year's Haskell Symposium. Related to this are his CUFP monad-par slides tutorial slides.

This concludes our tour of current approaches to Haskell parallelism. There is some new technology on the horizon which we may cover in a later digest, particularly Data Parallel Haskell and nested data parallelism (data parallelism not to be confused with dataflow parallelism), but for next month, let's take a little break from parallelism and look at something a little different.

Parallel GHC Project Update

The Parallel GHC Project is an MSR-funded project, run by Well-Typed, with the aim of demonstrating that parallel Haskell can be employed successfully in real world projects.

This has been a month for releasing software. We've recently put on Hackage updates to ThreadScope and some helper libraries

- ThreadScope (0.2.0), with a new spark profiling visualisation, bookmarks, and other enhancements

- gtk2hs (0.12.1), adding GHC 7.2 compatibility,

- ghc-events (0.3.0.1), adding support for spark events (needs GHC 7.3),

Duncan Coutts presented our recent work on ThreadScope at the Haskell Implementors Workshop in Tokyo (23 Sep). He talked about the new spark visualisation feature which show a graphical representation of spark creation and conversion statistics with a demo inspired by a program we are working on for Parallel GHC.

Meanwhile, we have also completed a pure Haskell implementation of the “Modified Additive Lagged Fibonacci” random number generator. This generator is attractive for use in Monte Carlo simulations because it is splittable and has good statistical quality, while providing high performance. The LFG implementation will be released on Hackage when it has undergone more extensive quality testing.

Blogs, Papers, and Packages

First Class Concurrency in Haskell (PDF) (31 Aug)

Keegan McAllister posted slides from a talk he gave last year at the Boston Area Haskell Users' Group. His slides give an overview of topics in concurrent imperative programming:

- Using closures with concurrency primitives to improve your API

- Using MVar to retrieve thread results

- How System.Timeout works

- Implementing IORef and Chan in terms of other primitives

- Implementing the π-calculus, and compiling the λ-calculus to it

You might find Keegan's slides to be a useful introduction to Haskell Concurrency.

Lambda to pi (9 Sep)

“If the λ-calculus is a minimal functional language, then the π-calculus is a minimal concurrent language”. Following up on his recent slides, Keegan wrote a follow-up post elaborating on his pi-calculus interpreter and lambda-calculus to pi-calculus compiler. If you want to run his blog post just pass the lhs file to GHCi.

(note that I had to use

-hide-package=monads-tf)

Sirkle (17 Sep)

Morten Olsen Lysgaard released Sirkle. It provides an implementation of the Chord Distributed Hash Table, and a simple storage layer with fault tolerance and replication similar to DHash. The Sirkle DHT implementation uses Cloud Haskell, which we saw some interest about in the last edition of this digest. Sirkle is available on hackage and GitHub. “If you think distributed computing is fun”, Morten invites us, “and would love a hobby project, join me in discovering what can be done with a DHT in Haskell!”

ThreadScope (5 Sep)

Duncan Coutts released ThreadScope 0.2.0, the performance visualiser that we saw Ketil used to make his STM experiment faster. The updated ThreadScope has a new spark profiling visualisation, bookmarks, and other enhancements. Now if only we had some sort of user documentation…

Mailing list discussions

Performance of concurrent array access (23 Aug)

As a first step to his foray into writing concurrent parallel Haskell programs Andreas Voellmy wrote a bit of code to read that reads and writes concurrently to an IOArray. Unfortunately, the performance with multiple cores was not very good and using ThreadScope did not reveal anything obvious. What did help on the other hand was to switch to IOUArray, which puzzled Andreas. Why would this improve scalability to multiple threads and cores? Andrew Coppin and Brandon Allbery suggested it may have something to do with strictness. Johan Tibbell pointed out some places where Andreas would likely want to add a bit of strictness to his original IOArray version. But this only seems to have helped slightly. Anybody else have an idea?

(Repa) hFlush: illegal operation (24 Aug)

Michael Orlitzky is using Repa to process MRI data, but when writing the results to file, he gets the error message

spline3: output.txt: hFlush: illegal operation (handle is closed)Trial and error is a bit hard to do here because it takes 45 minutes to get to the point of failure. Ben Lippmeier offered to have a look if Michael could provide some data to go with the code he posted. Ben also pointed out that the implementation of Repa's text IO functions is naive, and may have problem with massive files. If the data is in some standard binary form, it may be worthwhile to write a Repa loader for it.

how to read CPU time vs wall time report from GHC? (14 Aug)

Wishnu Prasetya is just getting started with Parallel Haskell. He's having some trouble interpreting runtime statistics from the RTS (eg.

8.97s ( 2.36s elapsed)).SPARKS: 5 (5 converted, 0 pruned) INIT time 0.02s ( 0.01s elapsed) MUT time 3.46s ( 0.89s elapsed) GC time 5.49s ( 1.46s elapsed) EXIT time 0.00s ( 0.00s elapsed) Total time 8.97s ( 2.36s elapsed)

Why does the CPU time on the left exceed the wall clock time on the right? Iustin Pop pointed out that CPU time accumulates over the multiple CPUs. The roughly 4:1 ratio suggest Wish must be making 95% use of 4 CPUs (or partial use of more CPUs). This may sound efficient except that at the end of day what counts is the difference in wall clock times. Iustin points out that the MUT/GC times show Wish spending 60% of his time in garbage collection, perhaps a sign of a space leak or an otherwise inefficient algorithm.

Can't link OpenCL on Windows (12 Sep)

OpenCL (Open Computing Language) is a framework for writing parallel programs that run on GPUs (more generally, on heterogeneous systems with perhaps a mix of CPUs and other processors). Jason Dagit is trying to get the OpenCLRaw bindings to work on Windows. On link time, however, he gets undefined symbol errors for everything he uses in the OpenCL API. Mystery solved: Jason later reported that the binding was set to use ccall whereas OpenCL uses stdcall.

Turn GC off (14 Sep) and Build local-gc branch of GHC (26 Sep)

Andreas Voellmy (who we saw above with IOArray woes) is writing a server handling multiple long-lived TCP connections with a bit of shared state among connections. Ideally, it'd be a case of more cores = more clients, but the current stop-the-world garbage collection in GHC makes this expensive. His first thought was to completely disable garbage collection, and just run until he is out of memory. Perhaps there is an alternative.

David Barbour commented that controlling the amount of live memory is important here, much more than avoiding allocations. Austin Seipp pointed us to recent work on the local-gc branch of GHC (see the paper Multicore Garbage Collection with Local Heaps). This work has not been merged into GHC though (as it's not clear if the improvements are worth the complexity increase).

Andreas decided to give it a shot. Unfortunately, he can't get it to build. Help?

A Missing Issue on Second Generation Strategies (24 Sep)

Burak Ekici is trying to parallelize RSA encryption and decryption with Strategies, but all his sparks keep getting pruned! Antoine Latter noticed that Burak's strategy wasn't actually doing anything to its input; it simply sparks off computations (themselves evaluated with

rdeepseq) that nobody ever looks at. Daniel Fischer made a similar point, providing some improved code with a refactor and clean up of repeated code along the way.

Parallel Haskell Digest 5 followup

Following up on the last word of the month (Strategies). Kevin Hammond observed that the first

parMapexample interweaves behavioural and functional code, defeating the point of strategies. The issue here is pedagogical: although I later follow up with the version usingparList rseq, I run the risk of people simply copy and pasting while missing out on the benefits. Thanks for the feedback, Kevin! I'll see if I can avoid the try-the-wrong-way-first style in the future.As a more general note, feedback people give, particularly criticism and suggestions for improvement are very valuable, so keep it coming, folks!

Stack Overflow and Reddit

- Concurrent reading and writing to IOArray in Haskell

- What's the best way to write some semaphore-like code in Haskell?

- Help understanding MVar example in Haskell

- How to exit from Hackstack Server App?

- The most useful error message evar! : haskell

- New IBM CPU has transactional memory built in : haskell

Help and Feedback

If you'd like to make an announcement in the next Haskell Parallel

Digest, then get in touch with me, Eric Kow, at

parallel@well-typed.com. Please

feel free to leave any comments and feedback!