Hello Haskellers!

Did you see Ambassador Peyton Jones in Scala land? Simon was recently at ScalaDays 2012 (a large gathering for professional Scala users) giving a keynote talk on Cloud Haskell (one hour video). Cloud Haskell is a pretty exciting new development in the Haskell space, providing the beginnings of a story for distributed programming in Haskell. It's also one of the areas we're focused on over the Parallel GHC project, building a new implementation to replace the current prototype. We're looking forward to talking a bit more about Cloud Haskell in the next (and final) edition of the digest.

Wait, did I say just final? Indeed, by the next digest, we'll be wrapping up the Parallel GHC project. In addition to a bit more Cloud Haskell material, we'll give a little recap of the things we and our partners worked on over the two years. It's been fun!

Meanwhile, in this penultimate edition, we'll be taking a look at

concurrent channels for our word of month. We also have

new parallel Haskell book to look forward to, an update to Accelerate,

the new meta-par family of packages to look at, and also a lot of

recent activity on StackOverflow.

News

-

Loren Davis and 7 other students have been accepted to the Google Summer of Code project under Haskell.org. Loren will be working to implement concurrent thread-safe mutable hash-table. Congratulations and best of luck to Loren, Aditya, David, Mark, Mikhail, Phillip, Shae, and Shayan. Hope it's a fun summer!

Lectureship in Computer Science - Saint Andrews

Kevin Hammond urges us to consider applying for this lecturship in functional programming (closing date 22 June) at the University of Saint Andrews: We seek lectureship applications from researchers who have a strong research background and excellent publication record in any area of functional programming, complementing and enhancing the existing research team, which has a strong focus on parallel programming models and implementation, resource-aware functional programming, dependent type systems, refactoring, static analysis, and performance modelling, and deep connections with the Haskell community.

O'Reilly book on Parallel and Concurrent Haskell (17 May)

Haskell is getting a third O'Reilly book! Joining the introductory Real World Haskell, and web-oriented Haskell and Yesod, will be a forthcoming book on parallelism and concurrency in Haskell (tentative completion date March 2013). Simon Marlow will building this book off his CEFP 2012 tutorial tutorial. He's “really keen for this to be a book that will be useful to people both learning about parallelism and concurrency in Haskell, and coding stuff for real-world use.” Please let him know if you have suggestions for topics or application areas you'd like covered.

Conferences

Erlang Workshop (due 3 Jun, workshop 14 Sep)

Haskell has been borrowing from Erlang lately (Cloud Haskell!). John Hughes suggests, “Why not adapt some cool Haskell ideas to Erlang too?” The Eleventh ACM SIGPLAN Erlang Workshop will take place this year in Copenhagen, Denmark on the tail end of the ICFP. Just two weeks to go!

Facing the Multicore-Challenge III (19-21 Sep)

And if ICFP isn't enough for you, how about this Conference for Young Scientists, just a few days after? The Hochschule für Technik, will hosting the third multicore-challenge conference in Stuttgart, Germany, from 19-21 September. It aims to combine new aspects of multi-/manycore microprocessor technologies, parallel applications, numerical simulation, software development and tools. Contributions are welcome from all participating disciplines. Particular emphasis is placed on the support and advancement of young scientists.

Word of the month

This month, we'll be taking a short breather in our exploration of the

Haskell concurrency space, and fleshing out some of the uses for the

tools we already have. In the past two digests, we saw how Haskell

provides locks for low-level concurrency, and the vastly safer

transactions for concurrency at a higher level. Both approaches give

us the notion of a typed mutable variables, the idea being that an

MVar Int would hold a locked integer, whereas a TVar Int would hold

instead hold transactional reference to an integer. These variables can

hold arbitrarily complex things of arbitrary type; you could have

anything from a TVar Char to a TVar Customer (where Customer would

be some record you've defined in your application).

Now that we have mutable variables, it's worth thinking a bit harder

about what we might actually put into them. Suppose you find yourself in

a typical producer/consumer scenario, for example, with a web service

that automatically marks student essays, and which is broken into a

piece that accepts submissions (producer) and which passes them on to

the core essay-marking engine (consumer). So the producer generates

essays and the consumer eats them up and does some work on them; how do

we get them talking to each other? It's not enough to just use a single

TVar because we want the producer to be able to continue cranking out

essays whilst the consumer is working, rather than waiting for it to

finish. We assume here that essay-marking is a fairly clever and

computationally expensive process, and for this reason, we would want

some kind of backlog that the producer can tack things on to, and the

consumer can pull things off of.

As such, our word of the month is channel. The unbounded channel

abstraction is something that you can fairly easily implement out of

either the locky MVar's or transactional TVar's, but we'll focus on

the latter as transactions are just so much more civilised (though the

same concepts would mostly apply). In the STM world, channels look a

little like the following:

-- Control.Concurrent.STM.TChan data TChan a newTChan :: STM (TChan a) writeTChan :: TChan a -> a -> STM () readTChan :: TChan -> STM a

In the same fashion as the TVar's that we introduced last time,

TChan's are parameterised with a type variable, meaning that you could

have a channel of characters with TChan Char, or a channel of

customers with TChan Customer, and so forth. Creating, reading, and

writing to a channel are all transactions (i.e., in the the STM monad).

Revisiting our essay marking service, we can sketch out how these

channels might be used:

import Control.Concurrent.STM.TChan

main :: IO ()

main = do

chan <- newTChan

forkIO (producer chan)

forkIO (consumer chan)

forever $ return ()

producer :: TChan Essay -> IO ()

producer chan = forever $ do

essay <- magicalWebFrameworkStuff

atomically $ writeTChan chan essay

consumer :: TChan Essay -> IO ()

consumer chan = forever $ do

essay <- atomically $ readTChan chan

mark essay

mark :: Essay -> IO ()

mark essay =

putStrLn "Let me think..."

-- State-of-the-art marking technology,

-- just $25000 per site license

randomRIO (1, 10000000) >>= threadDelay

pass <- randomIO

if pass

then putStrLn "Pass, good job!"

eles putStrLn "Fail!"

And that's it! Using concurrent channels does not get more complicated

or deeper than this. You may have noticed that in this particular

example, we have not really gained (or for that matter lost) that much

from sticking to the transactional version of channels. Using the locky

MVar version would basically consist of dropping the atomically's,

importing from Control.Concurrent.Chan, and using Chan instead of

TChan.

Now that we have a bit of an idea what channels are about, it could be

worthwhile to consider what it really offers over simpler alternatives.

For example, in the introduction we rejected the idea of just using a

single TVar because this would force our producer and consumers to

wait on each other for each and every essay, rather than going about

their asynchronously merry ways.

So we know we want something like channels, but how exactly do we go

about building them? For starters, wouldn't we get a channel structure

by just wrapping Data.Sequence.Seq with a single TVar? It could be

made to work as we are using STM (it simply wouldn't work if we were

using MVar's instead; consider the empty channel), but it would leave

us with the unfortunately inability to simultaneously read from and

write to the channel. These operations would have to grab a hold of the

whole queue, leaving the other to retry until later. It would a little

sad not to enable this bit of concurrency, considering that that reading

and writing take place at opposite ends of the queue, the reader walking

along trying to keep up with the writer.

Instead of naively wrapping a queue, the current implementation uses a

sort of linked list with TVar'ed cons cells and TVar's pointing to

both the beginning (the read end) and the end of the list (the write

end). Here are the data structures that make up a channel:

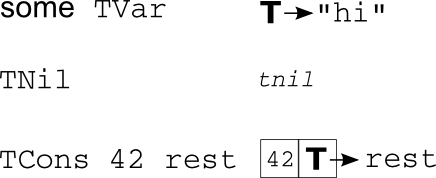

type TVarList a = TVar (TList a)

data TList a = TNil | TCons a (TVarList a)

data TChan a = TChan (TVar (TVarList a)) -- read end

(TVar (TVarList a)) -- write end

It can be a little bit tricky to think about because we've got TVar's

wrapping around things that eventually wrap around TVar's themselves.

It's a whole chain of TVar's, and if you can have a TVar a, there's

no reason not to have a TVar (TVar a). If that feels a bit shaky, try

implementing channels yourself as a quick little exercise. We'll speed

things along with a handful of pictures to illustrate how it might work.

First, our visual language for talking about TVar'ed cons cells:

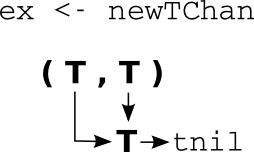

A new channel has three TVar's, one for the linked list (it points to

TNil), and a pair of read/write ones pointing to this pointer:

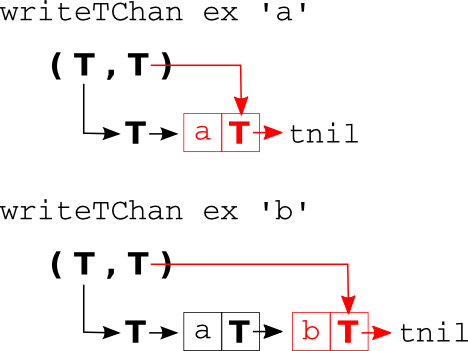

Writing the channel involves adding to the list and moving the write pointer to the new tail of the list:

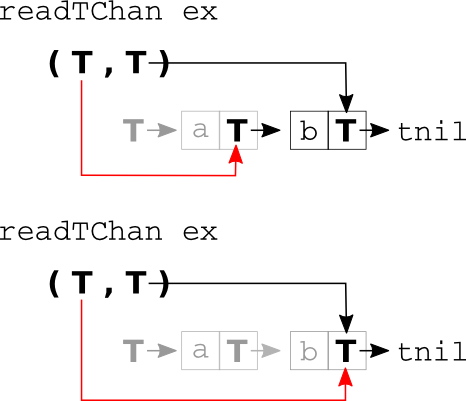

And finally reading those items off the channel involves moving the read pointer forward:

The implementation should be fairly straightforward from the pictures,

although one place you might get stuck when trying to read from an empty

channel. After all, how do you return a value from a channel that

doesn't have any, especially since you're expected to return plain old

a instead of Maybe a? Well, sometimes you just gotta wait. We

briefly glossed over this in our taste of STM in the

last word of the month, but STM

offers a retry function simply causes a transaction to be aborted and

tried again. Using this notion of blocking, you should be able to get a

readTChan that waits until there is something to be read.

Hopefully, the exercise of implementing channels will be a useful

reminder to think of the concurrency abstractions that Haskell provides

(threads, transactional mutable variables) as primitives on top of which

you can build more interesting and useful abstractions. For a little

more fun, head over to Simon Marlow's

tutorial on parallelism and concurrency.

In this tutorial, Simon illustrates the building channels over MVar's

(also worth doing) and mentions an easy generalisation to multicast

channels (one write end, two read ends!) and also a small extension to

“unread” a value (pushing it back on to the read end). Both extensions

are easy on the surface, but hard to nail down to the exact desired

semantics (there's no known correct implementation of the unread

extension), at least when you're dealing with locks and MVar's. But if

you stick to transactions and TVar's, both wind up being

straightforward. Check out his tutorial!

Videos

Towards Haskell in the Cloud (17 Apr, 1 hour)

Simon Peyton Jones gave one of the keynote lectures at the recent ScalaDays 2012 in London. Simon sets the stage with some of the recent thinking about how functional programming and parallel programming fit together: that functional is the way forward, but no one single approach is going to cover all scenarios; that cost models are important to keep in mind; and that being able to explore all these different approaches within a single language is a great thing.

From here, Simon launches into the heart of his presentation, Cloud Haskell (Scala hackers, think Akka). Cloud Haskell was developed with distributed programming in mind, in other words, large scale, multiple machines, no shared memory. Simon's talk covers quite a bit of ground: the Erlang-style actor model as an explicit embrace of distributed memory; the Haskell typed channels twist; programmer-controlled serialisation with type classes; and finally the longtime big stumper, how we go about serialising functions. It's interesting stuff. And as a sort of side bonus, even if don't walk away with a better idea what Cloud Haskell is all about, you should at least get a concrete sense for how type classes work.

Blogs and packages

hpuns: parallel jeux de mots (plays on words)

HPuns is a valuable tool for making your future (French speaking) children's lives miserable. It generates awful puns from names, and in parallel, no less! Paul Brauner was just looking for some parallel “speedups out of the box.“ Where he was previously was able to get only a mediocre speedup with Strategies, with the monad-par package he was able to knock out a quick 1.6x speedup (-N3) over a lunchbreak. HPuns may just be a toy (one file!) but it could be what you're looking for if monad-par usage examples is what you're after.

Accelerate version 0.12: GPU computing with Haskell (14 May)

Manuel Chakravarty the recent 0.12 release of Accelerate, with full sharing recovery in scalar expressions and array computations, some more examples, and bug fixes. Accelerate should still be considered a beta release, but the folks at UNSW are hungry for early adopters. So do give it a try!

How to write hybrid CPU/GPU programs with Haskell (4 May)

If you like your monad-par and your Accelerate working together, consider this proposition from Ryan Newton: “What’s better than programming a GPU with a high-level, Haskell-embedded DSL (domain-specific-language)? Well, perhaps writing portable CPU/GPU programs that utilize both pieces of silicon…”

Ryan walks us through installing the recently released meta-par packages and writing some simple hybrid code. The general idea seems to be to define CPU and GPU implementations of a task and to allow the generalised work-stealing scheduler to choose between them; the promise being that if a program performs much better on one device (say the GPU), that device will wind up doing most of the work.

More generally, the meta-par family of packages is aimed at heterogeneous programming (it provides a mechanism for building parallel schedulers out of "mix-in" components). Ryan's post focuses on the CPU/GPU scenario, but there are also future packages coming our way for distributed programming too. If you are interested in learning more, have a look at the meta-par GitHub page and the the draft paper A Meta-Scheduler for the Par-Monad.

Mailing lists

Is protocol-buffers package maintainer reachable? (23 Apr)

Paul Graphov finds the protocol-buffers and hprotoc packages failing to build with the latest GHC. Unfortunately, he seems to be having trouble getting his patches to maintainer Chris Kuklewicz. The mailing list thread came up with suggestions for places we might get in touch with Chris, and also touched on a sort of recurring dilemma in the Haskell community: what do we do when package maintainers disappear? The general feeling seems to be that provided we can find a new maintainer, we should discuss it on the mailing list, then go ahead and take over.

Threads and hGetLine (28 Apr)

H.M. has a simplified scenario he's having trouble with: suppose you have thread waiting on input with

hGetLine, and you have a supervisor thread to close the handle and/or kill the first thread. How do you make it work? It seems thathCloseandkillThreaddo not work for H. because the first thread is blocking for input. Alvaro Gutierrez suggests perhaps using a different approach which doesn't involve killing threads, perhaps non-blocking IO and a synchronised condition variable.Perhaps H.M. is on Windows? Joey Adams points out that “GHC currently doesn't have proper IO manager support for Windows. On Windows, IO is performed through FFI calls. An FFI call masks asynchronous exceptions… If another thread tries to

killThreadthe thread waiting for input, the exception will not be received until the FFI call completes. This means both threads will hang.”

using ResourceT with MVars (2 May)

Warren Harris would like to use LevelDB (an open source on-disk key-value store inspired by BigTable). Unfortunately for Warren, the 0.0.3 version of leveldb-haskell switches from

get/putin theIOmonad toResourceT. This makes it tricky for him to write code likewithMVar state $ db -> do maybeValue <- get db rdOpts key put db wrOpts key $ maybe init incr maybeValueIn effect, the question is “how do I run MVar operations in ResourceT?” Michael Snoyman suggests the lifted-based package for exactly this. Lifted-base exports IO operations from the base library lifted to any instance of

MonadBaseorMonadBaseControl. Warren can use any of theControl.Concurrent.MVar.Liftedfunctions sinceResourceTis an instance ofMonadBaseControl

meaning of

__PARALLEL_HASKELL__and -parallel (13 Apr)Facundo Domínguez was wondering what the

__PARALLEL_HASKELL__CPP macro, and the-parallelflags stand for. Jost Berthold explains that they both relate to parallel versions of GHC on distributed memory platforms.

Can Haskell outperform C++? (6 May)

Janek S. has seen claims floating around the internet that Haskell programs can have comparable performance to C and C++ versions. He's also seen claims about the promise of functional programming for automatically parallelising the software. Is there any evidence for these claims? This is a tricky question to pin down, and one that is vulnerable to apple/orange comparisons (some suggestions in the thread, 1, 2, 3) or debates about what makes a programming language desirable. Janek's question sparked a fairly epic thread, maybe worth digging through if you're struggled with Haskell performance before; or are member of the shadowy Haskell propaganda committee. For parallel Haskellers, I'll pick out a handful of sub-topics that came up.

Ertugrul Söylemez makes a general comment to look at the bigger picture. While (currently) seldomly beats C/C++ for number crunching algorithms, “where [it] really gets beyond C and C++ is in concurrency. Multithreaded network servers written in Haskell are not only a lot easier to write, but they also perform better than well written server programs in C/C++. This is because Haskell's concurrency goes well with its parallel execution model, where in machine code you don't really have a notion of procedures. You have thunks and they can not only be executed in parallel, but they can also be moved between threads freely.”

As for automatic parallelisation, Simon Marlow points out that there isn't yet any fully implicit parallelism in Haskell. What Haskell does provide, on the other hand, is a “a guarantee that you can safely call any function in parallel… as long as the function does not have an IO type”. So while fully automatic parallelism isn't here (and may not ever be), you can at least take an arbitrary piece of Haskell code and use it with something like

parMap, or call it from mulitple threads, and expect some sort of speedup from it; and safely, with a fully deterministic result and no nasty concurrency bugs to worry about.Finally, showing how it can be tricky to performance can be quite tricky, Ryan Newton had an example in his monad-par repo of a (rather C-ish) aggressively optimised Haskell program that was 6⨉ slower than its counterpart and worse 9⨉ with the new LLVM backend (said backend has been helpful in other cases, though, eg. for Manuel Chakravarty). There's a happy ending behind this. After some digging, Ryan uncovered a clever math trick used in the OCaml standard library. Porting it to Haskell makes his program not only faster than OCaml and Scheme but 48% faster than C++ too! Looks like we'll be getting a library patch from Ryan soon. “The moral,” Ryan comments, “is that community members could do a great deal to help "Haskell" performance by simply microbenchmarking and optimizing library routines in base!”

StackOverflow and Reddit

This month saw quite a lot of activity on StackOverflow, largely from

user Clinton trying to

puzzle through STM and other concurrency issues. The STM and

atomicModifyIORef series of questions could be interesting, at the

very least, to see what sorts of things people wonder about when they

first run into Haskell concurrency.

General questions

- Identifying the current HEC for a function in Haskell

- Haskell parallel computation using an STArray

- Slowdown when using parallel strategies in Haskell

- Haskell parallel performance

- “Monad-friendly” event-based IO

- Haskell concurrency - is forkIO really nondeterministic?

- TimeoutManager uses tryPutMVar to put nothing

- Software transactional memory with a big, shared piece of data

STM

- How does TVar work?

- Container for Haskell TVars

- Updating two or more TVars atomically. Possible?

- TVar: Preventing starvation

- TVar: orElse

- Concurrent generic data structure without deadlocks or resource starvation

- Concurrent data structure guidelines

- “Wait-free” data in Haskell

atomicModifyIORef

- How does 'atomicModifyIORef' work?

- Concurrency using simple IORef?

- Atomic IO wrapper/laziness?

- Attempt at parallel

atomicModifyIORefimplementation - Concurrent data structure guidelines

- SPJ talk: Towards Haskell in the Cloud : haskell

- Revamped Haskell concurrency and parallelism libraries page: from parallel arrays to Cloud Haskell : haskell

Help and Feedback

If you'd like to make an announcement in the next Haskell Parallel

Digest, then get in touch with me, Eric Kow, at

parallel@well-typed.com. Please

feel free to leave any comments and feedback!