Need for speed

GHC produces pretty fast code by most standards. After Well-Typed put some development effort towards faster code it’s now even faster, with a reduction in runtime of 3-4%.

As a disclaimer, these numbers are based on nofib benchmarks. Actual benefits for individual programs can vary. However, I’m confident that this will benefit any reasonably large program to some degree.

These changes were implemented over the summer by me (Andreas Klebinger) for Well-Typed. The changes will be in GHC 8.10 which is scheduled to be released early next year. So hopefully users won’t have to wait long to get these benefits.

So what did I change?

Here is a list of potentially user-facing changes as a result of this work. Not included are GHC internal changes which shouldn’t be user visible.

- Code layout redux: ~1-2% faster executables through better placement of code in binaries.

- Fixed a bug in the Windows linker.

- 20% smaller interface files through variable length encodings.

- Tune the number of precomputed Int closures: ~1% faster executables.

- Optimize case/switch statements better: ~1% faster executables. Much faster code in certain edge cases.

In the rest of this post, I will go over these changes in more detail …

1. Code layout redux

I already worked on code layout as part of Google Summer of Code last year. This year I picked this back up and improved it further.

Architecture of GHC’s code layout.

GHC’s pipeline has many stages, but relevant for code layout are the four below.

Cmm

v

Instruction Selection

v

Register Allocation

v

Code Layout

- We start to think about code layout once we get to Cmm. Cmm is a C-like intermediate language which contains meta information about some branches, indicating which branch of an if/else is more likely. We take this information and build an explicit control flow graph data structure from it.

- From there we perform instruction selection, which can introduce new basic blocks and assigns virtual registers. This can change control flow in certain cases.

- Register allocation can introduce new basic blocks as an implementation detail, but does not change control flow.

- And the last thing we do is place all basic blocks in a sequence.

For code layout much of the functionality I added can be viewed as two independent parts:

- Heuristics to estimate runtime behavior based on the control flow graph and code. Essentially we annotate the code with additional information at various stages and keep this information until code layout.

- An algorithm taking the annotated code as input in order to produce a performant code layout.

This year I focused almost exclusively on the first part: how to produce more accurate estimates of runtime behavior.

The second part was for the most part already implemented by me last year during Google Summer of Code. Last year, we tried to compute a good code layout quickly, based on estimates of how often each control flow path is taken.

The main goal in the second step is to minimize the number of jump instructions, while placing jump targets closer to the jump sources. This is a hard optimization problem, but good heuristics take us very far for this part.

Estimating runtime behavior – loops

Loops are important for two reasons:

- They are good predictors of runtime behavior.

- Most execution time is spent in loops.

Combined, this means identifying loops allows for some big wins. Not only can we do a better job of optimizing the code inside them, that code is also responsible for most of the instructions executed, which makes the gain even larger.

Last year I made the native backend “loop aware”. In practice this meant GHC would perform strongly connected components (SCC) analysis on the control flow graph.

- This allowed us to identify blocks and control flow edges which are part of a loop.

- In turn this means we can optimize loops at the cost of non-looping code for a net performance benefit.

- However, SCC analysis cannot determine loop headers, back edges or the nesting level of nested loops, which means we miss out on some potential optimizations.

This meant we sometimes ended up placing the loop header in the middle of the loop code. That is, the blocks of a loop could be laid out in the order 2->3->1.

This isn’t as horrible as it sounds. Loops tend to be repeated many times and it only adds two jumps of overhead at worst. But sometimes we bail out of loops early, and then the constant overhead matters. We also couldn’t optimize for inner loops, as SCC analysis is not enough to determine how loops are nested.

Nevertheless, being aware of loops at all far outweighed the drawbacks of this approach. As a result, this optimisation made it into GHC 8.8.

This year I fixed these remaining issues. Based on dominator analysis we can now determine not only whether a block is part of a loop, but also which loop it belongs to, how deeply nested that loop is, and which block is the loop header.

As a consequence we can prioritize the innermost loops for optimization, and can also estimate with reasonable accuracy how often each control flow edge in a given function is taken.
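As a rough illustration of how dominators expose loop structure, here is a minimal sketch in C. It is not GHC's implementation: the `Edge` type, the bitmask representation and the function names are all made up for this example, and it assumes at most 32 blocks, all reachable from block 0. An edge `u -> v` is a back edge exactly when `v` dominates `u`, and `v` is then the header of that loop.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define MAX_BLOCKS 32

/* Hypothetical, simplified CFG edge: block ids are 0..n-1, block 0 is the entry. */
typedef struct { int from, to; } Edge;

/* Iteratively compute dominator sets as bitmasks:
   bit d of dom[b] is set iff block d dominates block b. */
static void compute_dominators(int n, const Edge *edges, int n_edges, uint32_t *dom)
{
    assert(n > 0 && n <= MAX_BLOCKS);
    uint32_t all = (n == 32) ? UINT32_MAX : ((UINT32_C(1) << n) - 1);

    dom[0] = 1u;                               /* the entry is dominated only by itself */
    for (int b = 1; b < n; b++) dom[b] = all;  /* start from "everything" and shrink */

    bool changed = true;
    while (changed) {
        changed = false;
        for (int b = 1; b < n; b++) {
            /* Intersect the dominator sets of all predecessors of b. */
            uint32_t meet = all;
            for (int e = 0; e < n_edges; e++)
                if (edges[e].to == b) meet &= dom[edges[e].from];
            uint32_t next = meet | (UINT32_C(1) << b);
            if (next != dom[b]) { dom[b] = next; changed = true; }
        }
    }
}

/* An edge is a back edge if its target dominates its source;
   the target is then a loop header. */
static bool is_back_edge(const uint32_t *dom, Edge e)
{
    return (dom[e.from] >> e.to) & 1u;
}
```

For the graph 0->1, 1->2, 2->1, 1->3 this reports 2->1 as the only back edge, so block 1 is the loop header and can be placed at the top of the loop.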

Estimating runtime behavior – more heuristics

The paper Static Branch Frequency and Program Profile Analysis by Youfeng Wu and James R. Larus contains a number of heuristics which can be used to estimate runtime behavior, as well as an algorithm to compute global edge frequencies for a control flow graph. As far as I know this paper was based on work in/for gcc. However, much of it was directly applicable to GHC with slight modifications.

In order for their approach to be usable we need to have certain meta-information about branches available, which is no longer inferable from the assembly code which GHC performs code layout on. For this reason we get the required information during the various pipeline stages and keep it around until we can use it for the code layout.

This allows us not only to implement heuristics from the paper, but also to implement GHC specific heuristics. For example we identify heap and stack checks which are easy to predict statically.
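To give a feel for how branch probabilities turn into frequencies, here is a small sketch in C in the spirit of that approach. It is a simplification and not GHC's code: it assumes a single loop with one back edge whose probability is already known, and the function names are invented for this example. If the back edge is taken with probability p, the header runs 1 / (1 - p) times per entry into the loop, and each outgoing edge gets the block's frequency scaled by its branch probability.

```c
#include <assert.h>

/* Expected number of executions of a loop header, given how often the loop
   is entered and the (assumed) probability that the back edge is taken. */
static double loop_header_frequency(double entry_freq, double back_edge_prob)
{
    assert(back_edge_prob >= 0.0 && back_edge_prob < 1.0);
    return entry_freq / (1.0 - back_edge_prob);
}

/* Frequency of an outgoing edge: frequency of its source block times the
   estimated probability of taking that branch. */
static double edge_frequency(double block_freq, double branch_prob)
{
    assert(branch_prob >= 0.0 && branch_prob <= 1.0);
    return block_freq * branch_prob;
}
```

With a back edge probability of 0.75 and one entry, the header is estimated to run 4 times, the back edge 3 times and the exit edge once. A check that almost never fails, such as a heap or stack check, would simply get a very small probability on its failure branch.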

Results

Let’s start with the numbers:

| Benchmark suite | Runtime difference (lower is better) |

|---|---|

| nofib | −1.9% |

| containers | −1.1% |

| megaparsec | −1.1% |

This is a substantial improvement for the code generator!

Nofib is the default way to benchmark GHC changes.

In my experience containers and megaparsec respond very differently to code layout changes, so it is nice to see that this approach works well for both.

2. Linker woes

GHC has its own linker implementation used to load static libraries for e.g. GHCi and TH on Windows.

Some offsets a linker has to deal with are limited to 32 bits. This is not an issue in itself, as linkers can work around it as long as the overflow is recognized. So obviously we check for this case. However, the check was wrong, leading to runtime crashes in rare circumstances as jump offsets overflowed.

In the end this is a very rare error, usually only happening when at least TH and large packages are involved on Windows. So far I only know of one other person who ran into this issue. But it reliably occurred for me when working on GHC itself.

I identified the issue a while ago, and while running benchmarks I finally had time to write up a patch and fix it. It will be in 8.10 and will be backported to 8.8 as well.

For the curious

In detail, the issue is that there are no 64-bit relative jumps on x64. So if possible a linker will use a 32-bit offset to the target location. If this is not feasible, the linker has to work around this by jumping to another piece of code, which then uses an absolute jump to reach the destination.

This works. However, the check was faulty, so it sometimes failed to detect an overflow when the value was close to the end of the 32-bit range.
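To make the failure concrete, the sketch below wraps the two conditions quoted further down into C functions. The function names and the use of `int64_t` for `v` are assumptions made for this example, and right-shifting a negative value is implementation-defined in C, so treat it as an illustration rather than the linker's actual code. The interesting case is an offset just past `INT32_MAX`: its upper 32 bits are all zero, so the old condition does not fire even though the value no longer fits into a signed 32-bit field.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Old condition: only fires if the upper bits of both v and -v are non-zero. */
static bool old_needs_workaround(int64_t v)
{
    assert(v != INT64_MIN);  /* -v would overflow; not relevant for this demo */
    return (v >> 32) && ((-v) >> 32);
}

/* New condition: fires whenever v is outside the signed 32-bit range. */
static bool new_needs_workaround(int64_t v)
{
    return (v > (int64_t) INT32_MAX) || (v < (int64_t) INT32_MIN);
}
```

For `v = (int64_t) INT32_MAX + 1` the old function returns false while the new one returns true. For small offsets such as 100 both agree that no workaround is needed.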

If you are curious, here is the old (faulty) check, and the new (working) check.

// Old check

if ((v >> 32) && ((-v) >> 32))

// New check

if ((v > (intptr_t) INT32_MAX) || (v < (intptr_t) INT32_MIN))

3. Smaller interface files

GHC stores a lot of Int/Int32/… numbers in interface files. Most of these, however, represent small values. In fact, most of the numbers we store could fit into a single byte. So I changed the encoding to one which allows us to do so.

I wrote up the details a while ago so if you are interested check it out.
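For readers who have not seen a variable length encoding before, here is a minimal sketch in C of one common scheme, an LEB128-style encoding for unsigned values. Whether this matches the exact format GHC writes is an assumption on my part for this example, and the function names are made up. Each byte carries 7 bits of payload, and the high bit says whether more bytes follow, so any value below 128 takes a single byte.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Encode value with 7 payload bits per byte, least significant group first.
   The high bit of a byte is set when more bytes follow.
   Returns the number of bytes written (at most 10 for a 64-bit value). */
static size_t varint_encode(uint64_t value, uint8_t *out)
{
    size_t n = 0;
    do {
        uint8_t byte = value & 0x7F;
        value >>= 7;
        if (value != 0) byte |= 0x80;
        out[n++] = byte;
    } while (value != 0);
    return n;
}

/* Decode a value written by varint_encode; assumes well-formed input.
   Returns the number of bytes consumed. */
static size_t varint_decode(const uint8_t *in, uint64_t *value)
{
    uint64_t result = 0;
    unsigned shift = 0;
    size_t n = 0;
    uint8_t byte;
    do {
        assert(shift < 64);  /* more than 10 bytes would not fit in 64 bits */
        byte = in[n++];
        result |= (uint64_t)(byte & 0x7F) << shift;
        shift += 7;
    } while (byte & 0x80);
    *value = result;
    return n;
}
```

With this scheme the value 5 is stored as the single byte 0x05, while 300 needs two bytes, 0xAC followed by 0x02. Negative numbers need a signed variant, which this sketch leaves out.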

4. Precomputed Int closures

GHC uses a great hack to reduce GC overhead when dealing with Char or Int values.

Ömer Sinan Ağacan wrote about this in the past so I won’t reproduce the details here.

The short version is that a large number of Int and Char values will be close to zero. So during garbage collection GHC replaces all heap-allocated Char values in the ASCII range, and certain Int values, with references to ones built into the runtime.

This means we do not have to copy them during garbage collection, which speeds up collections. Further, we can get away with a single 1 :: Int value instead of many, so we also reduce memory usage.

This is great, but the Int range was only from -16 to 16, which seemed quite small to me.

I tried a few things and settled on -16 to 255 as the new range. The impact (based on nofib) was surprisingly large. From the MR:

Effects as I measured them:

RTS Size: +0.1%

Compile times: -0.5%

Runtime nofib: -1.1%

Nofib overrepresents Int usage. But even GHC itself got 0.5% faster, so I was quite happy with how this change turned out.

And there will be follow-up work improving things further when I get around to it.
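The idea behind the precomputed closures can be sketched in a few lines of C. This is only a model, not the RTS code: `BoxedInt`, the table and the function names are invented for this example, and a real closure carries more than just the value. The collector looks up a live boxed Int in a static table covering -16 to 255. If it finds an entry it points the reference there instead of copying the object.

```c
#include <assert.h>
#include <stddef.h>

#define SMALL_INT_MIN (-16)
#define SMALL_INT_MAX 255

/* Hypothetical stand-in for a heap-allocated Int closure. */
typedef struct { long value; } BoxedInt;

/* One statically allocated object per value in the supported range. */
static BoxedInt small_ints[SMALL_INT_MAX - SMALL_INT_MIN + 1];

/* Must be called once before shared_small_int is used. */
static void init_small_ints(void)
{
    for (long v = SMALL_INT_MIN; v <= SMALL_INT_MAX; v++)
        small_ints[v - SMALL_INT_MIN].value = v;
}

/* Returns the shared static object for v, or NULL if v is out of range,
   in which case the collector would copy the object as usual. */
static const BoxedInt *shared_small_int(long v)
{
    if (v < SMALL_INT_MIN || v > SMALL_INT_MAX) return NULL;
    const BoxedInt *shared = &small_ints[v - SMALL_INT_MIN];
    assert(shared->value == v);  /* fails if the table was never initialised */
    return shared;
}
```

Two separately allocated copies of 1 end up as the same pointer after this lookup, which is where both the saved copying and the saved memory come from. The cost is the static table itself, which grows with the size of the range.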

5. Switch statements

Here I optimized two patterns.

Eliminating redundant switches

The simpler change is that if all branches are equal we can eliminate the switch entirely. This happens rarely as this pattern is usually eliminated earlier in the pipeline. But sometimes it only arises after certain optimizations have been applied so GHC will check for this later in the pipeline (at the Cmm stage) as well.

This pattern is fairly rare. However when it happens in an inner loop it can result in a massive performance improvement, so it’s good to patch this.
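As a sketch of what such a check amounts to, consider a switch represented simply as an array of jump targets, one per alternative including the default. This representation and the function name are assumptions for the example, not how GHC's Cmm data types look. If every entry is the same, the switch can be replaced by an unconditional jump to that target.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Returns true and stores the common target in *target if every alternative
   of the switch jumps to the same block; returns false otherwise. */
static bool switch_single_target(const int *targets, size_t n, int *target)
{
    assert(n > 0);
    for (size_t i = 1; i < n; i++)
        if (targets[i] != targets[0]) return false;
    *target = targets[0];
    return true;
}
```

When this returns true, the scrutinee no longer has to be evaluated for the branch at all, which is where the large win in inner loops comes from.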

Avoid expression duplication

The other change was slightly more complex. For this we need to look at some Cmm code.

We start off with a Cmm switch statement like the one in this macro:

#define ENTER_(ret,x) \

again: \

W_ info; \

LOAD_INFO(ret,x) \

/* See Note [Heap memory barriers] in SMP.h */ \

prim_read_barrier; \

switch [INVALID_OBJECT .. N_CLOSURE_TYPES] \

(TO_W_( %INFO_TYPE(%STD_INFO(info)) )) { \

case \

IND, \

IND_STATIC: \

{ \

x = StgInd_indirectee(x); \

goto again; \

} \

case \

FUN, \

FUN_1_0, \

FUN_0_1, \

FUN_2_0, \

FUN_1_1, \

FUN_0_2, \

FUN_STATIC, \

BCO, \

PAP: \

{ \

ret(x); \

} \

default: \

{ \

x = UNTAG_IF_PROF(x); \

jump %ENTRY_CODE(info) (x); \

} \

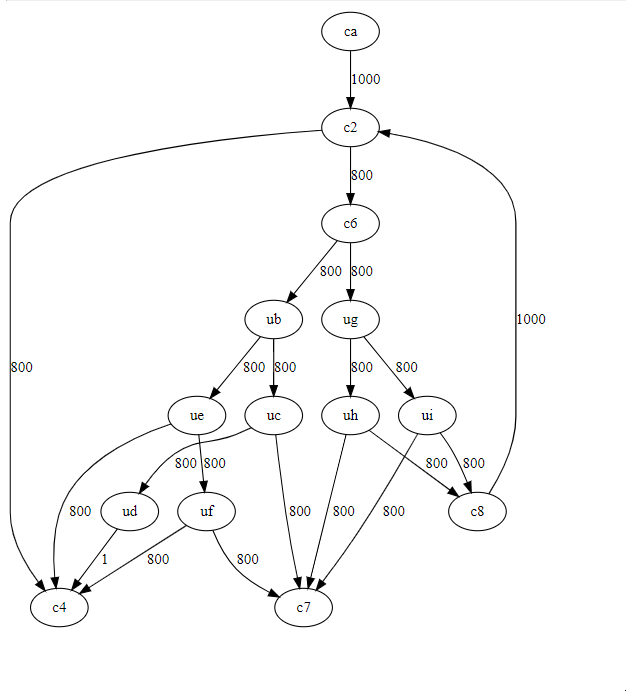

}GHC is fairly smart about how it compiles these kinds of constructs. In this case it will use a binary search tree as one can see in the control flow graph:

So far so good, however it also decides to inline the expression we branch on into each node.

Here is one snippet taken from GHC’s RTS where we duplicate I32[_c3::I64 - 8].

From a code size point of view this isn’t that bad, but we also duplicate the work in each node of our binary search tree as a result. This is especially bad if we end up duplicating memory reads.

==================== Optimised Cmm ====================

stg_ap_0_fast() { // [R1]

{ []

}

{offset

...

c6: // global

_c3::I64 = I64[_c1::P64]; // CmmAssign

if (I32[_c3::I64 - 8] < 26 :: W32) goto ub; else goto ug; // CmmCondBranch

ub: // global

if (I32[_c3::I64 - 8] < 15 :: W32) goto uc; else goto ue; // CmmCondBranch

uc: // global

if (I32[_c3::I64 - 8] < 8 :: W32) goto c7; else goto ud; // CmmCondBranch

...

}

}

If we go through four nodes in the search tree, this means we perform 3 redundant loads from memory. Even when we hit the L1 cache this still incurs a latency overhead of 15 cycles on my desktop machine.

For comparison if the branches get predicted correctly and we remove this overhead we should be able to process all 4 search tree nodes in 2-4 cycles total.
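The difference between the two shapes of code can be modelled in C by counting loads explicitly. This is a sketch for illustration only: the `load_type` helper, the counter and the returned class numbers are invented, and only the thresholds 26, 15 and 8 are taken from the Cmm snippet above. A C compiler would normally remove the repeated load by itself, so the counter is there to make the duplicated work visible.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

static unsigned load_count;  /* number of memory reads performed so far */

/* Stand-in for the memory read I32[_c3::I64 - 8]. */
static uint32_t load_type(const uint32_t *p)
{
    assert(p != NULL);
    load_count++;
    return *p;
}

/* The scrutinee expression is re-evaluated in every node of the search tree. */
static int classify_reloading(const uint32_t *p)
{
    if (load_type(p) < 26) {
        if (load_type(p) < 15) {
            if (load_type(p) < 8) return 0;
            return 1;
        }
        return 2;
    }
    return 3;
}

/* The scrutinee is loaded once and the result is reused in every node. */
static int classify_once(const uint32_t *p)
{
    uint32_t t = load_type(p);
    if (t < 26) {
        if (t < 15) {
            if (t < 8) return 0;
            return 1;
        }
        return 2;
    }
    return 3;
}
```

For an input of 3 both functions return the same class, but the first performs three loads on the way down the tree and the second only one.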

Only very rarely does GHC emit code following this pattern when compiling Haskell code. However, GHC also supports compilation of hand-written Cmm code, a feature heavily used by the RTS. Some of the more common macros used in the RTS lead to this pattern. As a consequence most, if not all, programs will benefit from this change as the RTS will perform better.

For nofib it reduced runtime by 1.0% so definitely a meaningful improvement.

Conclusion

I think it’s great that GHC not only adds new features, but also keeps improving things for existing code. And I’m glad I was able to add some improvements myself for the community.